
Agreement Measures for the High-Value Care (HVC) Rounding Tool During Three Iterations of Instrument Piloting, Observations of Patient Encounters on Bedside Rounds, Seattle Children’s Hospital, March–July 2016

Funding/Support: Funding for this study was provided through a Seattle Children’s Academic Enrichment Fund grant (LAN 24080047, AEF Beck 2015). Statistical support was provided by the Institute for Translational Health Sciences (ITHS) through the University of Washington. The ITHS is supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under award number UL1 TR000423. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Items with a mean importance rating ≥ 3 were eliminated, corresponding to > 80% of panelists rating an item as not important for inclusion.28 Eight items were removed from the tool because of mean importance ratings ≥ 3, yielding a list of 11 items. The remaining 11 items were assigned domains as suggested by the panel. When items conceptually overlapped two or more domains, we selected the most appropriate domain assignment through unanimous agreement of the research team. Additionally, 11 panelists (61.1%) provided 13 comments on individual items and 4 comments on the overall tool. We incorporated suggested edits to individual HVC items, focusing on concrete descriptors. These comments guided minor adjustments to the phrasing of the topics within the tool (see Chart 1).
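The Round 2 elimination rule (drop any item whose mean importance rating is 3 or higher) can be expressed as a simple filter. The sketch below is a minimal illustration, assuming ratings are stored per item as lists of integers on the four-point scale used in Round 2 (1 = "extremely important" to 4 = "not important"); the item names and ratings are hypothetical, not panel data.

```python
from statistics import mean

# Round 2 scale: 1 = extremely important, 2 = important,
# 3 = somewhat important, 4 = not important.
ELIMINATION_THRESHOLD = 3.0  # items with a mean rating >= 3 are dropped


def filter_items(ratings: dict[str, list[int]],
                 threshold: float = ELIMINATION_THRESHOLD):
    """Split items into (retained, eliminated), each mapping item -> mean rating."""
    retained, eliminated = {}, {}
    for item, scores in ratings.items():
        m = mean(scores)
        (eliminated if m >= threshold else retained)[item] = m
    return retained, eliminated


# Hypothetical ratings from 18 panelists for two illustrative items.
example = {
    "item_A": [1, 2, 1, 2, 2, 1, 1, 2, 3, 1, 2, 2, 1, 1, 2, 2, 1, 2],
    "item_B": [3, 4, 4, 3, 4, 4, 3, 4, 4, 4, 3, 4, 4, 2, 4, 4, 3, 4],
}
kept, dropped = filter_items(example)
print(kept, dropped)
```

Because lower numbers mean greater importance on this scale, the comparison is `>=` against the threshold rather than `<=`.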

To date, HVC curricula have mostly relied on lectures and classroom exercises for trainees, with variable emphasis on faculty development.11,12 Evidence suggests, however, that didactic teaching alone may not improve clinicians’ performance in HVC.13 Leep Hunderfund et al14 reported that exposing medical students to the overuse of medical tests at the bedside undermined HVC concepts taught in more formal settings. Ryskina et al15 reported that attending physician discussion and teaching regarding HVC practice in the clinical setting more strongly correlated with resident-reported HVC behaviors than a formalized HVC curriculum alone.

Our novel tool for observing HVC discussions in the inpatient setting is, to our knowledge, the first of its type. It helps address an important educational gap in translating HVC from theoretical knowledge to bedside practice.

Each team was composed of an attending physician, a senior resident (postgraduate year [PGY] 2 or PGY 3), and at least one intern (PGY 1). Most teams also included a third-year and/or a fourth-year medical student. Additionally, as part of a multidisciplinary approach to bedside rounding, other participants frequently included the bedside nurse, case manager, pharmacist, and dietitian. Attending physicians on eligible teams were contacted via e-mail to request their participation in the study.


In this literature review, we did not find any published instruments for observing HVC behaviors and discussions. However, from these articles we identified and extracted common HVC topics and concepts as potential observable discussions for inclusion in a preliminary HVC rounding tool. Ultimately, we identified 16 observable HVC concepts and categorized them into two domains—“actions” and “topics.”

Demographics of the 19 Expert Panelists Who Participated in the Modified Delphi Process Leading to the Development of the High-Value Care Rounding Tool, November 2015–January 2016a

We began by assembling a panel of national HVC experts, using purposeful sampling across three domains: clinical experience, specialty, and U.S. geographic regions. To ensure content expertise, we sought individuals who met at least one of the following four criteria: (1) published in a peer-reviewed journal within the past three years on an HVC topic; (2) presented on an HVC topic at a national conference; (3) served as a chair or co-chair of a national HVC committee; or (4) developed and published a formal HVC curriculum. To offset individual perspectives, we selected a broad range of experts representing pediatric and adult specialties, multiple academic tracks, and all regions of the United States.


At the start of rounds, the pair of raters described the study to all team members as an observational study of “teaching observed on bedside rounds,” but did not specifically tell them about the focus on HVC topics. At the conclusion of each patient encounter, the two raters discussed their observations and scoring, but they were prohibited from changing their initial ratings. The selection of the observation dates was based on a convenience sample of the observers’ schedules. We conducted three cycles of paired observations (described below), with data analysis performed iteratively after each cycle to identify and resolve discrepancies.


In the clinical setting, bedside teaching is “one of the ideal … teaching modalities, as [trainees] are found to be motivated to engage in clinical reasoning and problem-solving if their preceptor, acting as a role-model, provides adequate demonstration and guidance.”16 As such, bedside rounds, which often involve a discussion among the members of the multidisciplinary treatment team and the patient, provide a potentially valuable clinical learning environment for the teaching and role modeling of quality and cost-conscious practices.17–19 Additionally, bedside rounds provide an opportunity for targeted observation and real-time feedback on HVC principles for both trainees and faculty.20


Studies suggest that physicians do not routinely consider value in their management decisions,3,4 and they role model cost-conscious care infrequently.5 Because practice habits acquired in residency can persist for decades,6 effectively imparting HVC practices to trainees represents an important mechanism for waste reduction and is a growing priority for educators.7,8 Recently, there has been a proposal to expand the Accreditation Council for Graduate Medical Education’s residency competencies to include “cost-consciousness and stewardship of resources” as a general competency for training physicians,9 but best practices for teaching HVC remain unknown.10

Lastly, in our selection for the Delphi panel, the purposive sampling technique we used may have led to self-selection of individuals interested in collaborative research in the field. This potential bias, however, may actually be beneficial as the participants provided multiple insightful ideas. While we have not provided sufficient evidence of generalizability of the tool to assess HVC teaching in adult settings, nor examined its use in outpatient settings, the research team and the Delphi panel represented a diverse sample of clinical experience across pediatric and adult settings. This work provides a solid foundation for examining the tool’s use in multiple clinical settings with a variety of clinical practitioners.

Our tool development approach also has several limitations. We collected data at a single quaternary children’s hospital; the tool may not generalize to adult institutions, community hospital settings, or outpatient settings. In our initial literature review for the tool, our search strategy did not include Web of Science or Scopus, potentially missing tools in publications not indexed in the databases we searched. Next, the Delphi panelist participants represented medical specialties; the tool may lack items applicable to surgical specialties. Additionally, because of individual patient variations, some HVC topics may not have been relevant during a given patient encounter, potentially confounding the reliability of the tool.

Of the 16 initial HVC items drafted by the study team, all 19 panelists endorsed 7 items, 18 (94.7%) endorsed 6 items, and 17 (89.5%) supported the remaining 3 items. Fourteen panelists (73.7%) provided comments on at least 1 item, generating 80 total comments. All proposed HVC items received at least one comment; the majority of comments offered suggestions for clarification or highlighted limitations in phrasing. Seventeen panelists (89.5%) provided summary comments about the tool’s breadth, organization, and completeness. This feedback led us to replace the domains of “topics” and “actions” with the domains of “quality,” “cost,” and “patient values,” which improved alignment with key components of HVC described in the literature. Panelists’ comments and categorical importance ratings were used to modify 10 items, consolidate 4 items into 2, and add 5 new items for the second round; these changes resulted in a total of 19 items on the revised tool (see Chart 1).
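The Round 1 consensus rule (eliminate any item receiving < 80% “yes” votes, which the text attributes to Lynn’s criteria) can be checked directly against the reported endorsement counts. This is a minimal sketch; the function name is illustrative, and the only data used are the counts reported above.

```python
# Round 1: an item is retained only if >= 80% of panelists vote "yes".
N_PANELISTS = 19
CONSENSUS = 0.80

# Reported endorsements: (yes votes, number of items receiving that many).
endorsements = [(19, 7), (18, 6), (17, 3)]


def meets_consensus(yes_votes: int, n: int = N_PANELISTS,
                    cutoff: float = CONSENSUS) -> bool:
    """True if the share of "yes" votes reaches the consensus cutoff."""
    return yes_votes / n >= cutoff


for yes, n_items in endorsements:
    verdict = "retain" if meets_consensus(yes) else "eliminate"
    print(f"{yes}/{N_PANELISTS} = {yes / N_PANELISTS:.1%} -> {verdict} ({n_items} items)")

# Item bookkeeping for Round 2: 16 items, 4 consolidated into 2, 5 added.
assert sum(n for _, n in endorsements) == 16
round2_items = 16 - 4 + 2 + 5
print("items in Round 2:", round2_items)
```

With 19 panelists, an item needed at least 16 “yes” votes (16/19 ≈ 84.2%), so every item cleared the threshold in Round 1; the changes between rounds came from comments and ratings, not elimination.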

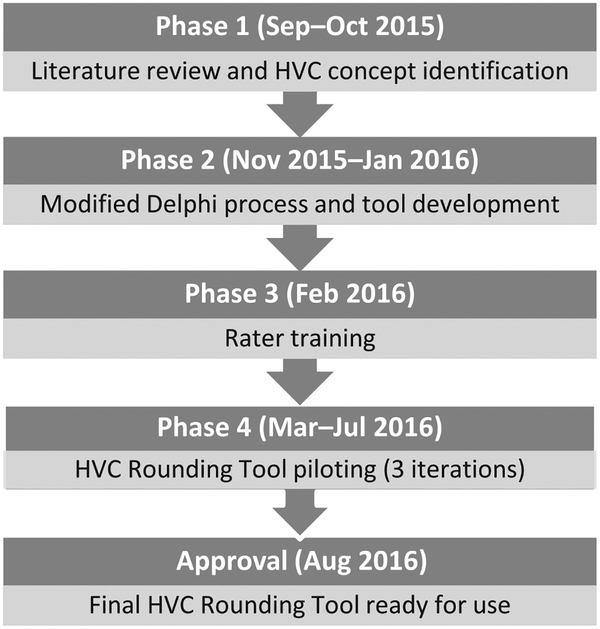

The authors developed the HVC Rounding Tool in four iterative phases, using Messick’s validity framework. Phases 1 and 2 were designed to collect evidence of content validity, and Phases 3 and 4 to collect evidence of response process and internal structure. Phase 1 identified HVC topics within the literature. Phase 2 used a modified Delphi approach for construct definition and tool development. Through two rounds, the Delphi panel narrowed 16 HVC topics to 11 observable items, categorized into three domains (quality, cost, and patient values). Phase 3 involved rater training and creation of a codebook. Phase 4 involved three iterations of instrument piloting. Six trained raters, in pairs, observed bedside rounds during 148 patient encounters in 2016. Weighted kappas for each domain improved from the first to the third iteration: quality increased from 0.65 (95% CI 0.55–0.79) to 1.00, cost from 0.58 (95% CI 0.40–0.75) to 0.96 (95% CI 0.80–1.00), and patient values from 0.41 (95% CI 0.19–0.68) to 1.00. Percent positive agreement for all domains combined improved from 65.3% to 98.1%. This tool, to the authors’ knowledge the first of its kind with established validity evidence, addresses an important educational gap for measuring the translation of HVC from theoretical knowledge to bedside practice.

Next, we undertook establishing response process validity, a marker of data integrity. Response process is evidence that error and bias associated with use of the HVC Rounding Tool is controlled or eliminated as much as possible, so that the rater’s thought process while using the tool is consistent with the accurate measurement of observed HVC discussions.22 To do this, during rater training, the raters elucidated their thoughts when using the tool. From these discussions, we developed a supplemental codebook to establish a consistent cognitive approach for use of the tool (see Supplemental Digital Appendix 2 at http://links.lww.com/ACADMED/A477).

Little is known about current practices in high-value care (HVC) bedside teaching. A lack of instruments for measuring bedside HVC behaviors confounds efforts to assess the impact of curricular interventions. The authors aimed to define observable HVC concepts by developing an instrument to measure the content and frequency of HVC discussions.


Development and Refinement of the High-Value Care (HVC) Rounding Tool From the Preliminary Item List Through the Modified Delphi Process and Instrument Piloting to Determine the Final 10 Observable Itemsa

To evaluate IRR, we calculated unweighted kappa estimates of individual items and weighted kappa estimates for the domains of quality, cost, and patient values after each iteration of testing. We chose weighted kappa, a measure of interrater consensus, rather than the Cronbach alpha coefficient, a measure of internal consistency, to more accurately reflect that use of the HVC Rounding Tool required a high level of judgment on the part of the observers.32 Additionally, because the proportion of observed HVC topics was low and kappa has limitations for rare event outcomes, we also calculated several measures of absolute agreement: percent positive agreement (PPA), Chamberlain’s percent positive agreement (CPPA), and percent negative agreement (PNA). Although CPPA is less common, we included it because it is a more conservative measure of rater agreement than PPA. If a is the number of observations in which both raters record a positive rating and b + c is the number in which only one rater does, PPA counts each jointly positive observation once for each rater (2a / [2a + b + c]), whereas CPPA calculates the percentage of times both raters record a positive rating out of all observations in which at least one rater marked a positive rating (a / [a + b + c]).33 For the same agreement counts, CPPA never exceeds PPA; thus, it is a stricter form of agreement.
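The agreement indices above can be computed from a 2 × 2 table of two raters’ dichotomous codes. The sketch below uses the standard definitions (PPA = 2a/[2a + b + c], CPPA = a/[a + b + c], PNA = 2d/[2d + b + c], and Cohen’s kappa), plus a linearly weighted kappa for ordinal scores. The article does not state its weighting scheme or how domain-level scores were formed, so the linear weights and the treatment of a domain score as an ordinal category 0..k−1 are assumptions; function names and the example data are hypothetical.

```python
from collections import Counter


def agreement_2x2(r1: list[int], r2: list[int]) -> dict[str, float]:
    """Agreement indices for two raters' 0/1 codes of the same encounters."""
    if len(r1) != len(r2):
        raise ValueError("raters must code the same encounters")
    cells = Counter(zip(r1, r2))
    a, b = cells[(1, 1)], cells[(1, 0)]  # both positive; rater 1 only
    c, d = cells[(0, 1)], cells[(0, 0)]  # rater 2 only; both negative
    n = a + b + c + d
    po = (a + d) / n                                        # observed agreement
    pe = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2   # chance agreement
    nan = float("nan")
    return {
        "PPA": 2 * a / (2 * a + b + c) if a + b + c else nan,
        "CPPA": a / (a + b + c) if a + b + c else nan,
        "PNA": 2 * d / (2 * d + b + c) if d + b + c else nan,
        "kappa": (po - pe) / (1 - pe) if pe < 1 else nan,
    }


def weighted_kappa(r1: list[int], r2: list[int], k: int) -> float:
    """Linearly weighted Cohen's kappa for ordinal scores in 0..k-1 (k >= 2)."""
    n = len(r1)
    obs = [[0.0] * k for _ in range(k)]
    for x, y in zip(r1, r2):
        obs[x][y] += 1
    row = [sum(obs[i]) for i in range(k)]                       # rater 1 marginals
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]  # rater 2 marginals
    num = den = 0.0
    for i in range(k):
        for j in range(k):
            w = abs(i - j) / (k - 1)          # disagreement weight
            num += w * obs[i][j]              # weighted observed disagreement
            den += w * row[i] * col[j] / n    # weighted expected disagreement
    return 1 - num / den if den else float("nan")


# Hypothetical codes for one item across 10 encounters.
r1 = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
r2 = [1, 0, 0, 0, 1, 1, 0, 0, 0, 0]
res = agreement_2x2(r1, r2)
print(res)
```

In this toy example a = 2, b = c = 1, and d = 6, so PPA is 4/6 while CPPA is 2/4, illustrating why CPPA is the stricter index. With k = 2, the linearly weighted kappa reduces to the unweighted kappa.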

Unnecessary health care spending in the United States exceeds one-third of the country’s total medical expenditures.1 Facing increasing pressure to reduce waste while optimizing patient outcomes, physicians and health care systems are struggling to provide high-value care (HVC). As no standard definition of HVC exists,2 in this article we define HVC as economically responsible individualized care focused on patient outcomes using evidence-based medicine.

As a result of the Round 1 voting (described in the text), 10 items were modified, 5 items were added, and 4 items were consolidated.


During creation of the HVC Rounding Tool, we performed extensive rater training for calibration of its use; this could inflate the IRR when translated to real-life application. The codebook development and addition of specific topic descriptors to the final tool likely mitigate this limitation. Also, because the tool is scored dichotomously, it gives brief or superficial commentary the same weight as extended or persuasive teaching. Because of the lack of literature on observable HVC discussions, we felt that the binary system provided an important advance in understanding the teaching environment and served as a useful stepping stone to more nuanced studies.


Although a systematic assessment of consequences evidence for the validity of the instrument was beyond the scope of this study, when we applied Cook and Lineberry’s34 four-dimensional framework for evaluating consequences evidence, we found anecdotal evidence that use of the HVC Rounding Tool had an unintended, beneficial impact on both those being observed and the observers. Attending physicians reported teaching more when they were being observed. In addition, the raters themselves reported increased awareness of effective teaching strategies during rounds.

Correspondence should be addressed to Corrie McDaniel, Seattle Children’s Hospital, 4800 Sandpoint Way NE, M/S FA.2.115, PO Box 5371, Seattle, WA 98105; telephone: (206) 987-5888; corrie.mcdaniel@seattlechildrens.org.


We developed the HVC Rounding Tool in four iterative phases from September 2015 through August 2016 (Figure 1). As a conceptual framework for development and refinement of the tool, we used Messick’s21 five pillars of validity evidence (content, response process, internal structure, relationship to other variables, and consequences), which are a standard for establishing validity in novel instrument development and endorsed by the National Council on Measurement in Education.24 We designed Phases 1 and 2 to collect evidence of content validity and Phases 3 and 4 to collect evidence of response process and internal structure. All aspects of this study were approved as exempt by the institutional review board at Seattle Children’s Hospital, a freestanding children’s hospital in Seattle, Washington. Participation was voluntary, and written informed consent was not required. Verbal consent was provided by the participating patients, patients’ families, and the health care team.

Medical teams eligible for participation in this study included four inpatient pediatric teaching teams that cared for patients with both general and subspecialty pediatric illnesses:

Validity evidence for content demonstrates that the selected topics adequately reflect concepts relevant to HVC.24 As such, our tool development began with a literature review to identify existing instruments for the measurement or observation of HVC practices. We searched three databases (MEDLINE via PubMed, Embase, and PsycINFO) for articles published from 1970 through September 2015, using the following terms: high value care, cost-conscious care, value-based care, cost-awareness, and cost-effectiveness. No language or study methodology restrictions were applied.

Next, we piloted the HVC Rounding Tool over a five-month period at Seattle Children’s Hospital to establish internal structure validity, a demonstration that the statistical and psychometric components of the tool are both reproducible and generalizable.23 Reliability is one component of reproducibility, and interrater reliability (IRR) demonstrates the degree to which independent raters using the tool agree.22 During instrument piloting, pairs of the six trained raters observed bedside rounds on 17 dates with 148 unique patient encounters. We used 14 of the 15 possible rater pairings.
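The “15 possible” pairings follow from choosing 2 of the 6 raters, and the totals of 17 dates and 148 encounters are the sums across the three pilot iterations. A minimal arithmetic check, using the rater initials and the per-iteration counts reported elsewhere in this article:

```python
from itertools import combinations
from math import comb

# Rater initials as listed in the rater-training description.
raters = ["C.M.", "J.B.", "J.F.", "A.W.", "C.S.", "T.C."]
pairs = list(combinations(raters, 2))  # every unordered dual-rater combination

# (observation dates, patient encounters) for pilot iterations 1-3.
iterations = {1: (4, 35), 2: (8, 62), 3: (5, 51)}
total_dates = sum(dates for dates, _ in iterations.values())
total_encounters = sum(enc for _, enc in iterations.values())

print(len(pairs), comb(6, 2), total_dates, total_encounters)
```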

We conducted two rounds of feedback and consensus building, employing an anonymous electronic survey format that used REDCap, a secure, Web-based data capture instrument.27 In the introduction for the Delphi survey, we described HVC “as care that minimizes costs and considers patient goals and preferences while seeking optimal health outcomes.”

Currently, we are investigating the use of the HVC Rounding Tool to measure the prevalence of HVC discussions on multidisciplinary bedside rounds. Further applications of the HVC Rounding Tool include faculty development through peer feedback and coaching on the integration and role modeling of HVC discussions in bedside rounds, measuring the impact of formal HVC education on bedside HVC discussion, and ultimately helping assess the relationship between HVC behaviors and actual patient outcomes.


Six attending hospitalists—three pediatric physicians (C.M., J.B., J.F.) and three internal medicine physicians (A.W., C.S., T.C.)—underwent a three-part training, facilitated by the principal investigator (J.B.), consisting of three hour-long sessions held over a one-month period. First, we reviewed the instrument itself and adjusted its formatting on REDCap for usability. Second, to gain experience using the instrument in real time, the group watched and scored training videos of family-centered rounding reenactments available on YouTube.30,31 After rating the videos independently, the raters shared their observations and discussed each HVC item, repeating this process until they reached consensus on which discussions were HVC discussions and how to record those discussions using the tool. Lastly, as noted above, we developed a preliminary codebook for consistency in observations, including specific descriptors and anchors for each item.


The third iteration of piloting involved observations of 51 patient encounters on five dates. Both the weighted kappa estimates and positive agreement measures improved during the third round: kappas of 0.96 (95% CI 0.80–1.00) for cost and 1.00 for both quality and patient values, and PPA and CPPA increased to 98.1 and 96.3, respectively, across all domains (Tables 2 and 3). There were no rater disagreements on any items in the quality and patient values domains and only a single disagreement on a topic within the cost domain (for IRR for individual items, see Supplemental Digital Appendixes 4–6 at http://links.lww.com/ACADMED/A477). Throughout all three iterations of piloting, because of the low frequency of observed items, the PNA remained high for all three domains, ranging from 91.7 to 96.1 in the first iteration and improving to 99.8–100 in the third iteration (Table 3).

The HVC Rounding Tool was constructed in REDCap for real-time observational use during bedside rounds. The 11 items (see Chart 1) were organized by domain and arranged for dichotomous scoring, indicating whether each HVC topic was observed or not observed. When an HVC topic was recorded as observed, the tool prompted further description of the event, including who initiated the discussion (attending/fellow, resident/medical student, nurse, pharmacist, patient/family, or other) and a qualitative description of the HVC topic. If multiple discussions on the same HVC topic were initiated within a single patient encounter, the tool allowed for independent recording of each discussion. Lastly, drawing on Priest and colleagues’29 study of factors that influence bedside discussion and education on rounds, we added four additional yes/no questions:
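The record structure described above (dichotomous items grouped by domain, with each observed topic carrying one or more discussions, each with an initiator and a free-text description) can be modeled as a small data structure. This is a hypothetical sketch for analysis purposes only: it does not reproduce the actual REDCap instrument, and the class names, item identifiers, and note text are assumptions. The initiator categories and domains are taken from the text.

```python
from dataclasses import dataclass, field
from enum import Enum


class Initiator(Enum):
    ATTENDING_FELLOW = "attending/fellow"
    RESIDENT_STUDENT = "resident/medical student"
    NURSE = "nurse"
    PHARMACIST = "pharmacist"
    PATIENT_FAMILY = "patient/family"
    OTHER = "other"


class Domain(Enum):
    QUALITY = "quality"
    COST = "cost"
    PATIENT_VALUES = "patient values"


@dataclass
class Discussion:
    initiator: Initiator
    description: str  # rater's qualitative note on the HVC topic


@dataclass
class ItemRecord:
    item_id: str  # hypothetical identifier for one tool item
    domain: Domain
    # Several discussions of the same topic in one encounter are kept separately.
    discussions: list[Discussion] = field(default_factory=list)

    @property
    def observed(self) -> bool:
        """Dichotomous score: observed if at least one discussion was logged."""
        return bool(self.discussions)


@dataclass
class Encounter:
    encounter_id: str
    items: list[ItemRecord]

    def observed_by_domain(self) -> dict[Domain, int]:
        """Number of items scored as observed in each domain."""
        counts = {d: 0 for d in Domain}
        for item in self.items:
            counts[item.domain] += int(item.observed)
        return counts


# Hypothetical encounter: one cost topic raised twice, one quality topic not raised.
enc = Encounter("enc-001", [
    ItemRecord("cost_item_1", Domain.COST, [
        Discussion(Initiator.PHARMACIST, "hypothetical note"),
        Discussion(Initiator.ATTENDING_FELLOW, "hypothetical follow-up note"),
    ]),
    ItemRecord("quality_item_1", Domain.QUALITY),
])
counts = enc.observed_by_domain()
print(counts)
```

Keeping every discussion while deriving the item score as “any discussion logged” preserves the tool’s dichotomous scoring without discarding the repeated-discussion detail.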


Between pilot iterations 2 and 3, we held an additional in-person rater-training session for all six participating raters, during which we further honed the codebook and modified the tool to include specific examples to increase the clarity and measurability of each of the items. The tool revisions included expanding definitions for each item as well as adding specific examples in parentheses. One item (“Reference a specific patient/family values/goals of care”) was dropped from the patient values domain before the third and final piloting round, as raters found it redundant with a similar item in that domain. This led to 10 observable HVC topics in the final HVC Rounding Tool (see Chart 1; for the codebook and a color-coded version of Chart 1 highlighting the items that were changed or moved in each round, see Supplemental Digital Appendixes 2 and 3, respectively, at http://links.lww.com/ACADMED/A477).

We gathered evidence for three of Messick’s five sources of validity, with anecdotal reports of a fourth source. Our modified Delphi process rigorously defined the construct of observable HVC discussions, laying the foundation for content validity through the clarity and specificity of the selected topics reflecting the construct of HVC.35,36 Subsequent rater training and codebook development, along with piloting of the instrument, improved measures of reliability for each domain. This provided response process and internal structure validity evidence by demonstrating each item’s relevance to observable HVC discussions.32 Lastly, we gathered anecdotal evidence of attending physician and rater experience during the use of the tool, highlighting the potential impact, or consequences, of the tool.

Miranda C. Bradford, Center for Clinical and Translational Research, Seattle Children’s Research Institute, Seattle, Washington.

The use of the HVC Rounding Tool provides an opportunity to empirically assess the discussion of HVC topics at the bedside. Based on the rigorous process we used to establish reliability measures, we believe the tool can be used reliably by a single rater. In application, we would suggest that raters have a baseline understanding of the nature and types of discussions as well as teaching practices that occur on bedside rounds. We also recommend that raters undergo a period of training using videos or practice rounds to familiarize themselves with the real-time use of the tool. Assessing the effort required to deploy this tool with naïve observers or in different settings represents an important area for future study.


Unfortunately, little is known about current practices in HVC teaching in the clinical learning environment. A foundational step in this effort is to develop an instrument for measuring the frequency and content of HVC discussions at the bedside. To our knowledge, no such published instrument exists, which confounds efforts to assess behavior changes associated with HVC curricular interventions or to guide faculty development surrounding HVC practices. Therefore, we sought to define the construct of observable HVC discussions and subsequently to develop an instrument that measures these discussions within the pediatric inpatient setting. In this article, we describe our development of the HVC Rounding Tool and our purposeful collection of validity evidence to inform the interpretation of data from this tool.21–23

Timeline for the development and piloting of the High-Value Care (HVC) Rounding Tool, Seattle Children’s Hospital, September 2015–August 2016.


Our initial search returned a large number of publications. We refined the results by adding the following inclusion criteria: observable behaviors, medical education, curriculum, teaching, patient preferences, and inclusion of learners/trainees (fellows, residents, interns, medical students). From the secondary search results, we excluded articles without an abstract, leaving a total of 89 eligible publications. Examination of the bibliographies from these publications uncovered an additional 15 relevant articles for review. Based on abstract review of these 104 publications, we selected 31 relevant full-text articles for inclusion in the final review (for the list of included articles, see Supplemental Digital Appendix 1 at http://links.lww.com/ACADMED/A477).


Ethical approval: Approval for this project was obtained from Seattle Children’s Hospital Institutional Review Board, which found the project to be exempt (10/29/2015, ID# Beck 15798).

The first iteration of instrument piloting consisted of observations of 35 patient encounters on four dates. Weighted kappa estimates for the three domains were 0.41 (95% CI 0.19–0.68) for patient values, 0.58 (95% CI 0.40–0.75) for cost, and 0.65 (95% CI 0.55–0.79) for quality, with almost half of the observed topics recorded by only one rater of the pair (all domains combined: PPA = 65.3, CPPA = 48.5) (see Tables 2 and 3). This lack of agreement in the kappa, PPA, and CPPA values was particularly marked in the quality and patient values domains. To improve reliability, we performed a qualitative content analysis of the event comments to identify instances where the same event was coded differently by raters. This revealed areas of conceptual overlap, leading to expansion of the codebook to guide users during the second round of testing. This strengthened the response process validity by further aligning the use of the tool with the defined construct, and it improved IRR as a measure of internal structure.
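Because PPA and CPPA are computed from the same counts, one determines the other. Writing C = a/(a + m) for CPPA, with m = b + c disagreements, gives m = a(1 − C)/C, and substituting into PPA = 2a/(2a + m) yields PPA = 2C/(1 + C). The sketch below uses this identity to check the all-domain PPA/CPPA pairs reported for the three pilot iterations, assuming each pair was computed from a single pooled 2 × 2 table; the function name is illustrative.

```python
def ppa_from_cppa(cppa_pct: float) -> float:
    """PPA (percent) implied by CPPA (percent) from the same 2x2 table."""
    c = cppa_pct / 100
    return 100 * 2 * c / (1 + c)


# Reported all-domain (PPA, CPPA) pairs for pilot iterations 1-3.
reported = {1: (65.3, 48.5), 2: (82.5, 70.2), 3: (98.1, 96.3)}

for iteration, (ppa, cppa) in reported.items():
    implied = ppa_from_cppa(cppa)
    print(f"iteration {iteration}: reported PPA {ppa}, implied {implied:.1f}")
    # Reported values are rounded to one decimal place.
    assert abs(implied - ppa) < 0.1
```

The identity also shows why CPPA is the stricter index: 2C/(1 + C) ≥ C for any C between 0 and 1, with equality only at 0 or 1.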

The authors wish to thank the participating experts of the Delphi panel: Carolyn Avery, Brandon Combs, Eric Coon, Maya Dewan, D.C. Dugdale, Reshma Gupta, Valerie Lang, Heather McLean, Bradley Monash, Christopher Moriartes, Jim O’Callaghan, Ricardo Quinonez, Shawn Ralston, Daniel Rausch, Glenn Rosenbluth, Adam Schickedanz, Alan Schroeder, Geeta Singhal, and Daisy Smith. Additionally, the authors would like to thank Drs. Jonathan Ilgen and Lynne Robins for their guidance and review of the manuscript.

We used the Delphi method, a consensus-building process applied when published information is inadequate,25 to define the construct of observable HVC behaviors and discussions, which was critical for establishing content validity for the development of the instrument. A traditional Delphi process involves in-person discussion among panelists; we used a modified approach as we conducted the panel without face-to-face interactions among the panelists.26


Abbreviations: PPA indicates percent positive agreement; CPPA, Chamberlain’s percent positive agreement; PNA, percent negative agreement.

All 19 panelists participated in the first round of the Delphi process. Eighteen of these panelists also participated in the second round.


Nineteen (95.0%) of the 20 potential panelists accepted the invitation to participate in the modified Delphi process (Table 1). In Round 1, the panelists reviewed the preliminary HVC instrument, composed of 16 items grouped in “topics” and “actions” (see Chart 1). We asked the panelists to determine whether each item was an “appropriate indicator of HVC.” If a panelist marked “yes,” he or she then was asked to rate the item’s relevance for inclusion on an instrument to assess HVC discussions during rounds. Ratings used a three-point scale (“extremely important,” “important,” or “somewhat important”). If a panelist marked “no,” indicating that the item was not an appropriate indicator of HVC, he or she was prompted to specify whether the item was ambiguous and to suggest clarifications. In addition, the panelists reviewed the grouping of items, either approving the “topics” or “actions” groupings or proposing novel groupings. Lastly, the panelists were asked to propose additional items they felt were consistent with and relevant to the HVC construct. A priori, we had decided to use Lynn’s28 criteria to define consensus and to eliminate any items that received < 80% of “yes” votes during the first survey round.

The remaining source of validity evidence, relationship to other variables, establishes that results from the developed tool correlate with the results from similar instruments.23 Although no other published instruments exist for measuring the observation of the HVC construct during bedside rounds, other tools have been used to assess discussions on bedside rounds. Satterfield et al37 looked at the prevalence of social and behavioral topics discussed on bedside rounds, and Pierce et al38 examined bedside discussions around test-ordering principles. In the next use of the HVC Rounding Tool, we will further be able to establish this last form of validity evidence for the tool through the correlation of HVC discussions occurring on rounds to other studies assessing bedside discussions.


Weighted Kappas for the High-Value Care (HVC) Rounding Tool During Three Iterations of Instrument Piloting, Observations of Patient Encounters on Bedside Rounds, Seattle Children’s Hospital, March–July 2016


Our approach to instrument development has several strengths that support using the HVC Rounding Tool in future studies and evaluating it in other settings. First, we observed behaviors of multidisciplinary medical teams rather than surveying attitudes toward HVC, addressing a potential social or personal desirability bias to be perceived as practicing HVC. Second, although our instrument is an observational tool that cannot determine causation, it can reflect correlation between a team’s or physician’s attitudes and their actual behavior.39 Third, we piloted our tool on both general pediatric inpatient rounds and pediatric subspecialty rounds, increasing the potential applicability of the tool across a variety of inpatient pediatric specialties. Lastly, providing HVC to patients requires active participation from all members of the health care team, including the patient and family. Our tool reflects this in its ability to capture input not only from physicians on the team but also from nurses, pharmacists, ancillary staff, and patients and families.

Abbreviations: CBC indicates complete blood count; ESR, erythrocyte sedimentation rate; CRP, C-reactive protein; NNT, number needed to treat; NNH, number needed to harm; d/c, discontinue.

During Round 2, 18 (94.7%) of the 19 panelists who participated in Round 1 reviewed the revised 19-item tool. While Round 1 focused on individual item relevance, Round 2 focused on prioritization. The panelists were asked to rate the importance of each of the 19 items for inclusion in the HVC Rounding Tool, using a four-point scale (1 = “extremely important,” 2 = “important,” 3 = “somewhat important,” 4 = “not important”). If an expert indicated the item was not important, he or she was asked to suggest a way to reword or strengthen the item. The panelists were also asked to assign a domain (quality, cost, or patient values) to each item.
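Panelists’ domain assignments can be tallied per item to find the most frequently suggested domain. The sketch below is a hypothetical illustration: it takes the modal domain and flags an even split for review. In the study, items that conceptually overlapped two or more domains were assigned by unanimous agreement of the research team, so the tie-flagging rule here is an assumption, not the authors’ procedure.

```python
from collections import Counter
from typing import Optional


def assign_domain(votes: list[str]) -> tuple[Optional[str], list[str]]:
    """Return (modal domain, []) or (None, tied domains) if the panel splits evenly."""
    ranked = Counter(votes).most_common()
    top = ranked[0][1]
    tied = [domain for domain, n in ranked if n == top]
    return (tied[0], []) if len(tied) == 1 else (None, sorted(tied))


# Hypothetical votes from 18 panelists for two items.
clear = assign_domain(["cost"] * 10 + ["quality"] * 8)
split = assign_domain(["cost"] * 9 + ["quality"] * 9)
print(clear, split)
```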

The second iteration of instrument piloting involved observations of 62 patient encounters on eight dates. Weighted kappa estimates for the domains for the second round ranged from 0.25 (95% CI 0.08–0.44) for quality to 0.41 (95% CI 0.15–0.67) for patient values (Table 2). However, PPA and CPPA for all domains combined increased to 82.5 and 70.2, respectively (Table 3).
